We present Neural 3D Strokes, a novel technique that generates stylized images of a 3D scene at arbitrary novel views from multi-view 2D images. Unlike existing methods that apply stylization to trained neural radiance fields at the voxel level, our approach draws inspiration from image-to-painting methods, simulating the progressive painting process of human artwork with vector strokes. We develop a palette of stylized 3D strokes from basic primitives and splines, and formulate 3D scene stylization as a multi-view reconstruction process based on these 3D stroke primitives. Instead of directly searching for the parameters of these 3D strokes, which would be too costly, we introduce a differentiable renderer that allows stroke parameters to be optimized with gradient descent, and propose a training scheme to alleviate the vanishing gradient issue. Extensive evaluation demonstrates that our approach effectively synthesizes 3D scenes with significant geometric and aesthetic stylization while maintaining a consistent appearance across different views. Our method can be further integrated with style losses and image-text contrastive models to extend its applications, including color transfer and text-driven 3D scene drawing.
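Why a differentiable renderer needs soft stroke boundaries, and where vanishing gradients can come from, can be illustrated with a single primitive. The sketch below is an assumption for illustration, not the paper's formulation: it models a stroke as a sphere with signed distance d(x), converts it to a smooth occupancy with a sigmoid of hypothetical sharpness `sharpness`, and computes the analytic gradient of that occupancy with respect to the sphere radius.

```python
import math

def sphere_sdf(p, center, radius):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, center) - radius

def soft_occupancy(sdf, sharpness=10.0):
    """Smooth occupancy sigmoid(-sharpness * sdf): ~1 inside, ~0 outside.

    Written in a numerically stable form so large |sdf| does not overflow.
    """
    z = -sharpness * sdf
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def occupancy_grad_wrt_radius(p, center, radius, sharpness=10.0):
    """Analytic d(occupancy)/d(radius) for the soft sphere stroke.

    d(sdf)/d(radius) = -1 and d(occ)/d(sdf) = -sharpness * occ * (1 - occ),
    so d(occ)/d(radius) = sharpness * occ * (1 - occ).
    """
    occ = soft_occupancy(sphere_sdf(p, center, radius), sharpness)
    return sharpness * occ * (1.0 - occ)

center, radius = (0.0, 0.0, 0.0), 1.0
print(occupancy_grad_wrt_radius((1.0, 0.0, 0.0), center, radius))  # point on the surface
print(occupancy_grad_wrt_radius((3.0, 0.0, 0.0), center, radius))  # point far outside
```

A hard 0/1 indicator would give zero gradient everywhere except exactly on the surface. The sigmoid yields a usable gradient near the boundary (2.5 for the surface point above), but it decays exponentially with distance, so a stroke far from where it is needed receives almost no training signal. This is one plausible reading of the vanishing gradient issue that the training scheme is meant to alleviate.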

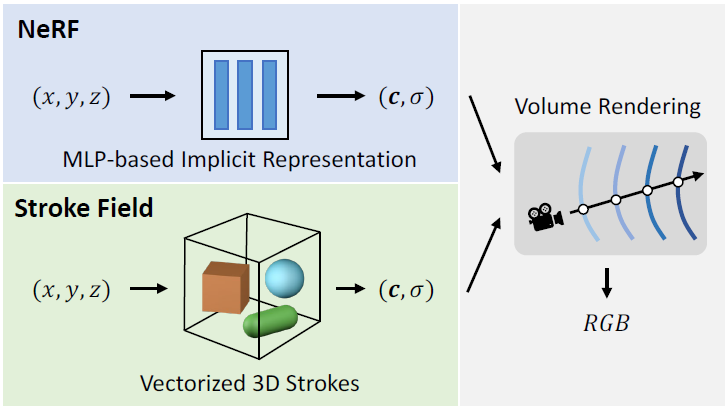

Instead of an MLP-based implicit representation, our method represents a 3D scene with a learned vectorized stroke field. We construct a palette of 3D strokes based on geometric primitives and spline curves.
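As a hypothetical example of a spline-based stroke, consider a tube of constant radius swept along a cubic Bézier curve. Its signed distance can be approximated as the distance to the nearest of N sampled curve points minus the radius. The control points, radius, and sample count below are illustrative assumptions; the paper's actual stroke parameterization may differ.

```python
import math

def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a 3D cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    w = (u**3, 3 * u**2 * t, 3 * u * t**2, t**3)  # Bernstein weights
    return tuple(w[0] * a + w[1] * b + w[2] * c + w[3] * d
                 for a, b, c, d in zip(p0, p1, p2, p3))

def tube_stroke_sdf(p, ctrl, radius, samples=64):
    """Approximate signed distance to a tube of `radius` around a Bezier curve.

    The distance to the curve is approximated by the nearest of `samples + 1`
    uniformly spaced curve points, so it can slightly overestimate the true value.
    """
    nearest = min(math.dist(p, cubic_bezier(*ctrl, i / samples))
                  for i in range(samples + 1))
    return nearest - radius

# Collinear, evenly spaced control points give the straight segment B(t) = (3t, 0, 0).
ctrl = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0))
print(tube_stroke_sdf((1.5, 0.5, 0.0), ctrl, radius=0.2))  # outside the tube
print(tube_stroke_sdf((1.5, 0.1, 0.0), ctrl, radius=0.2))  # inside the tube
```

Sampling keeps the sketch short and differentiable with respect to the control points almost everywhere; an exact point-to-curve distance would require solving a higher-degree polynomial.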

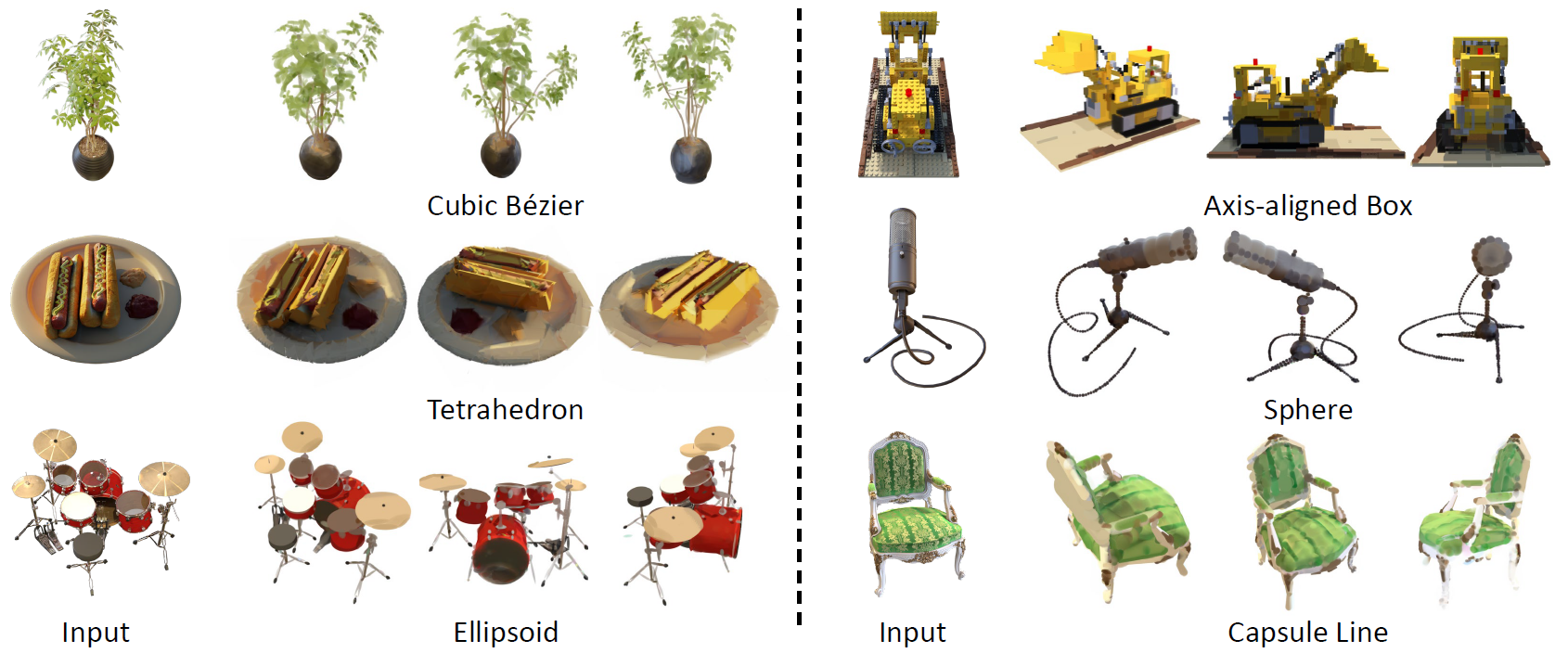

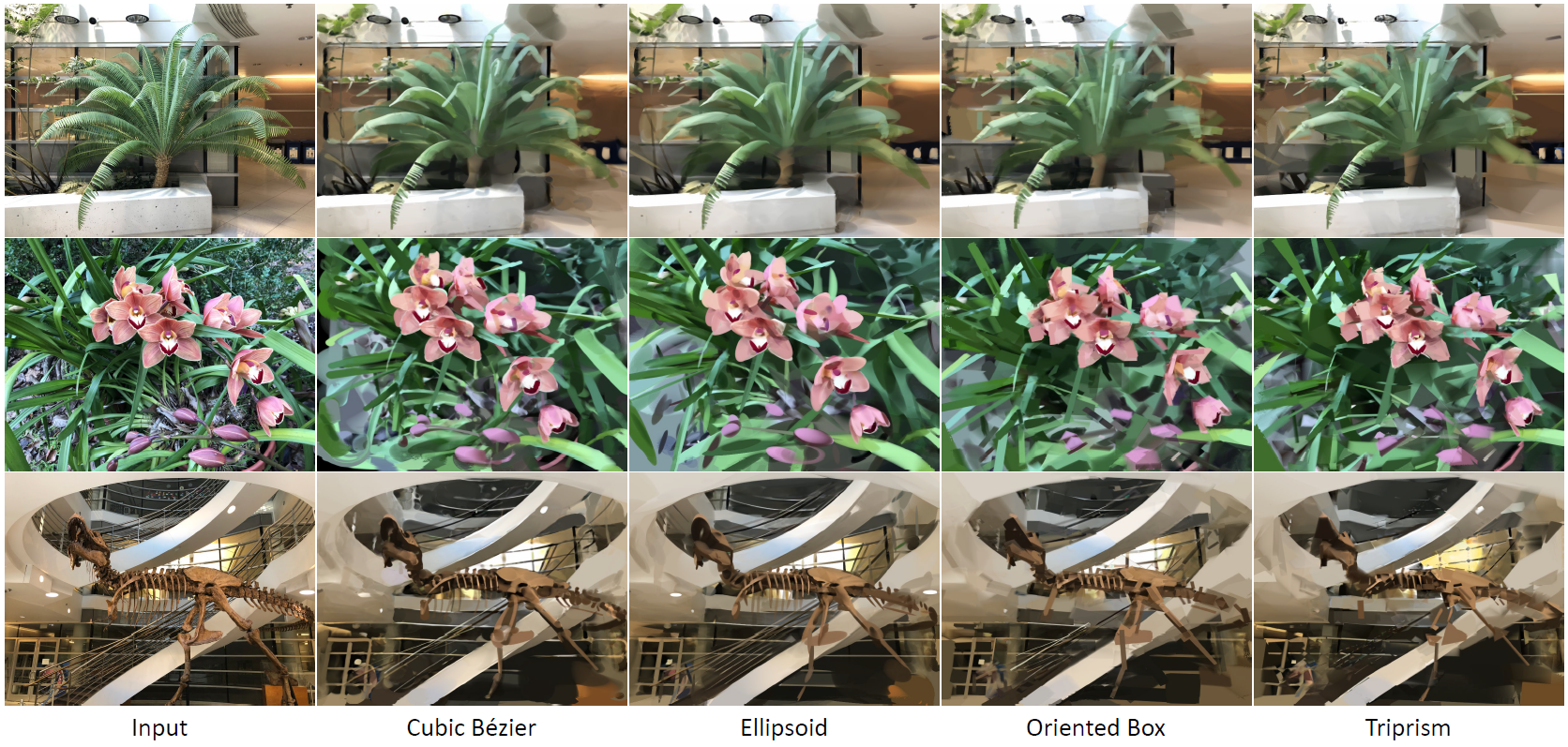

We test our stroke-based 3D scene representation on the Blender and LLFF datasets.

For single-object scenes we use 500 strokes, while for forward-facing scenes we use 1000 strokes.

Our vectorized stroke representation is able to recover the 3D scenes with high fidelity while maintaining a strong geometric style defined by the strokes.

We recommend zooming in to observe the visual details of the 3D strokes.

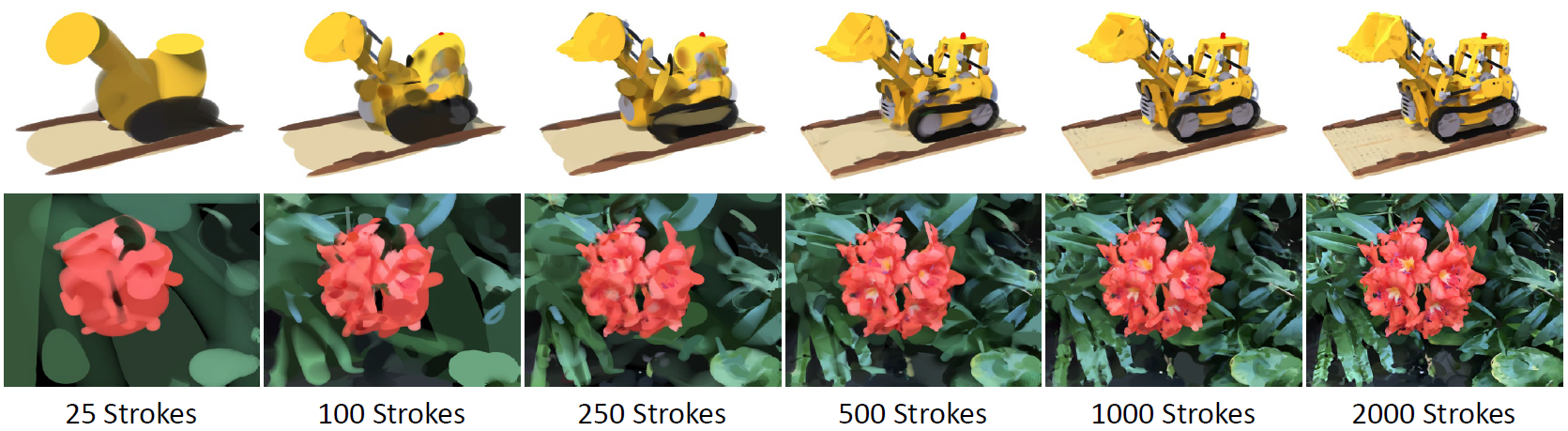

By changing the total number of strokes, our stroke-based representation can capture both the abstraction and fine-grained details of the original scenes.

We demonstrate novel view synthesis on scenes stylized with different 3D strokes.

The stroke-by-stroke painting process, in which strokes are progressively added to the scene.
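The stroke-by-stroke process can be pictured as rendering a ray through only the first k strokes of an ordered list. The sketch below assumes that each stroke is a hypothetical callable returning a density and an RGB color at a 3D point, combines overlapping strokes by summing densities and density-weighting their colors (an illustrative choice, not necessarily the paper's), and uses standard NeRF-style alpha compositing along the ray.

```python
import math

def sphere_stroke(center, radius, rgb, density=50.0):
    """Hypothetical stroke: constant density and color inside a sphere.

    Uses a hard inside/outside test for simplicity; a trainable version
    would need a soft boundary to receive gradients.
    """
    def field(x):
        inside = math.dist(x, center) < radius
        return (density if inside else 0.0), rgb
    return field

def render_ray(origin, direction, strokes, k, near=0.0, far=4.0, n_samples=128):
    """Alpha-composite the first k strokes along one ray (midpoint quadrature)."""
    dt = (far - near) / n_samples
    transmittance = 1.0
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * dt
        x = tuple(o + t * d for o, d in zip(origin, direction))
        sigma_sum, rgb_sum = 0.0, [0.0, 0.0, 0.0]
        for stroke in strokes[:k]:          # only strokes "painted" so far
            sigma, rgb = stroke(x)
            sigma_sum += sigma
            for c in range(3):
                rgb_sum[c] += sigma * rgb[c]  # density-weighted color
        if sigma_sum <= 0.0:
            continue                          # empty space contributes nothing
        alpha = 1.0 - math.exp(-sigma_sum * dt)
        weight = transmittance * alpha
        for c in range(3):
            color[c] += weight * rgb_sum[c] / sigma_sum
        transmittance *= 1.0 - alpha
    return tuple(color)

blue = sphere_stroke((0.0, 0.0, 3.0), 0.5, (0.0, 0.0, 1.0))  # painted first, farther away
red = sphere_stroke((0.0, 0.0, 1.5), 0.5, (1.0, 0.0, 0.0))   # painted second, in front
for k in range(3):
    print(k, render_ray((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [blue, red], k))
```

With k = 0 the ray stays black, with k = 1 it is almost pure blue, and with k = 2 it becomes almost pure red because the later red stroke sits in front of the blue one and occludes it. Painting order and depth order are independent: each added stroke changes the image only where it is visible.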

We compare our method with 2D stroke-based rendering methods. Our method achieves strict multi-view consistency thanks to its inherently 3D representation.

If you find this work useful in your research, please cite us:

@misc{duan2024neural,
  title={Neural 3D Strokes: Creating Stylized 3D Scenes with Vectorized 3D Strokes},
  author={Hao-Bin Duan and Miao Wang and Yan-Xun Li and Yong-Liang Yang},
  year={2024},
  eprint={2311.15637},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}